This is the best summary I could come up with:

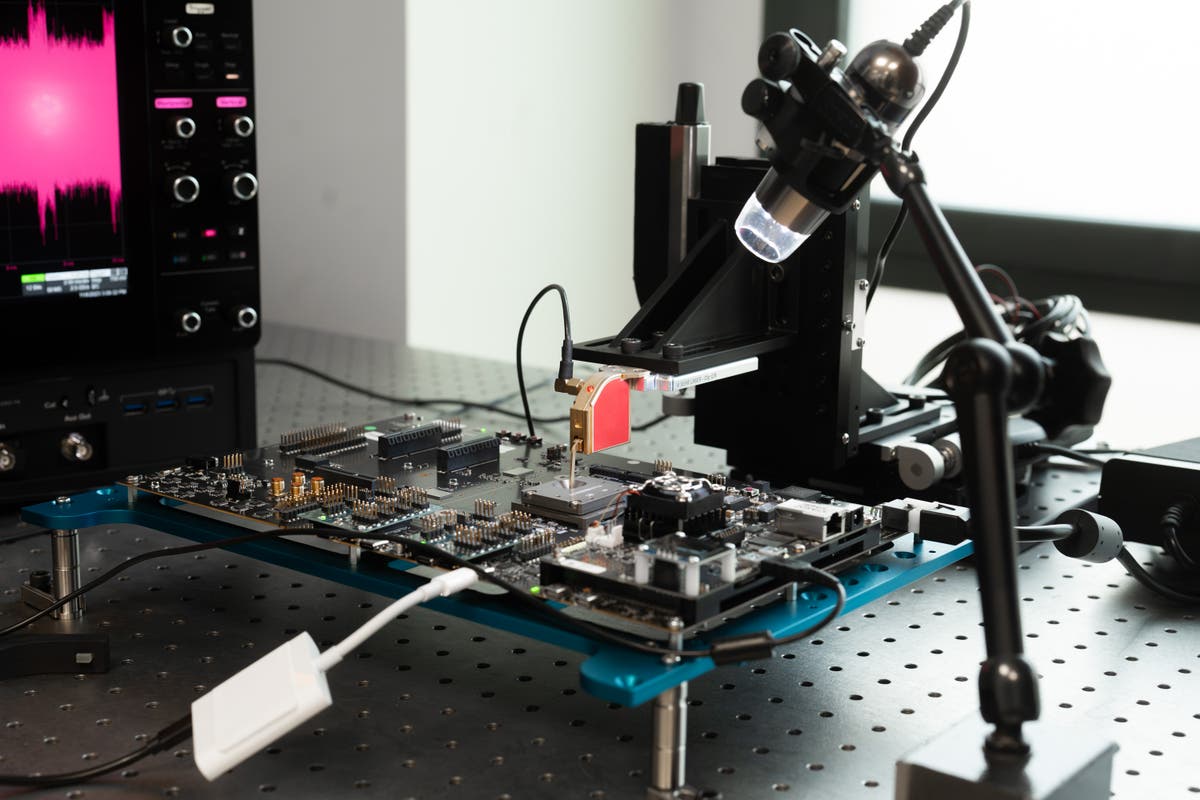

Using a vast array of technology, including lasers and finely tuned sensors, they are trying to find gaps in their security and patch them before the devices even arrive in the world.

The actual chip doing the encryption can show signs of what it is doing: while processors might seem like abstract electronics, they throw off all sorts of heat and signals that could be useful to an attacker.
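The article is about physical leakage (power draw, heat, emissions) measured with lab equipment, but the same idea has a purely software analogue: timing. A comparison that exits at the first wrong byte runs longer the more of a guess is correct. The sketch below is illustrative only; `naive_equals` and `constant_time_equals` are hypothetical helpers, and the step counter stands in for elapsed time. It is not how Apple tests its chips.

```python
import hmac
from typing import Tuple


def naive_equals(secret: bytes, guess: bytes) -> Tuple[bool, int]:
    """Compare byte by byte and stop at the first mismatch.

    Returns the result plus how many bytes were examined. That count stands
    in for elapsed time: the longer the matching prefix, the longer it runs.
    """
    if len(secret) != len(guess):
        return False, 0
    steps = 0
    for a, b in zip(secret, guess):
        steps += 1
        if a != b:
            return False, steps
    return True, steps


def constant_time_equals(secret: bytes, guess: bytes) -> bool:
    # hmac.compare_digest avoids content-based short-circuiting, so its
    # timing does not reveal how many leading bytes matched.
    return hmac.compare_digest(secret, guess)


secret = b"hunter2!"
for guess in (b"xxxxxxxx", b"huxxxxxx", b"huntexxx", b"hunter2!"):
    matched, steps = naive_equals(secret, guess)
    print(guess, matched, steps, constant_time_equals(secret, guess))
```

An attacker who can time the naive version can recover the secret one byte at a time by keeping whichever guess takes longest; hardware side-channel testing looks for the same kind of correlation in physical signals instead of in time.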

But they are up against highly compensated hackers: in recent years, an advanced set of companies has emerged offering cyber weapons to the highest bidder, primarily for use against people working to better the world: human rights activists, journalists, diplomats.

But recent years have also seen Apple locked in an escalating battle: Lockdown Mode might be a breakthrough it is proud of, but it was only needed because of an unfortunate campaign to break into people’s phones.

It is a kind of difficulty that does not arise even in other security work: those stealing passwords or scamming people out of money don’t have lobbyists and government power.

The highly targeted, advanced attacks that Lockdown Mode and other features guard against, however, are costly and complicated, which means they are often carried out by governments that could cause difficulties for Apple and other technology companies.

The original article contains 2,167 words, the summary contains 219 words. Saved 90%. I’m a bot and I’m open source!

Downvote me if you must, but what if Apple accidentally became the privacy community’s greatest ally? I know it can’t happen bc they’ll always keep a back door for their data mining.

A back door is not what Apple uses to collect data; that’s very, very different from sending analytics.

Apple already outperforms Google by 3x in terms of privacy points collected.

Wanna talk about backdoors? Check out the exploit list for Android phones compared to iPhones. Nuff said.

How does a multimillion-dollar company beat a trillion-dollar company at its own security game? Also, not suing Corellium would be a good start: don’t sue when you get caught lying, do better.

Idk, but there are plenty of other Android OSes that are more secure than what Google puts out, and they make substantially less money.

Google hasn’t been the same since the switch regarding “evil” and “ethics”.

Anyone can see that.

Can you post some reliable evidence, or are you talking out of your ass? Now you’re just looking like an angry iBoy.

The pragmatic approach used for comparing Android and iOS helps to understand that Android is more susceptible to security breaches and malware attacks.

https://www.sciencedirect.com/science/article/abs/pii/S1574013721000125

Across all studied apps, our study highlights widespread potential violations of US, EU and UK privacy law, including 1) the use of third-party tracking without user consent, 2) the lack of parental consent before sharing personally identifiable information (PII) with third-parties in children’s apps, 3) the non-data-minimising configuration of tracking libraries, 4) the sending of personal data to countries without an adequate level of data protection, and 5) the continued absence of transparency around tracking, partly due to design decisions by Apple and Google. Overall, we find that neither platform is clearly better than the other for privacy across the dimensions we studied.

You had to call me a 3rd grade name just to feel like you won.

Pretty sad.

Like I care. Strong comeback, though.

Pretty obvious you do.

-doesn’t agree with/like what somebody else says: immediately jumps to insults.

Come on. Let’s not insult people because we don’t like what they say. We can do better. We should do better. If people just got along with others who are different or have different interests, the whole world would be a better place.

Genuinely, I am asking you to reevaluate how you respond, and maybe just try to be a little nicer to others. It costs nothing and makes the world a little bit better every time.

Hell, if they legitimately stopped their data collection, that would be enough to tip me into iOS until a truly good Linux phone in my price range comes along.

I’m curious, do you have a source for them having a backdoor and mining data?

Pure speculation based on things I saw in the news over the years

Wow, OK, well that doesn’t have any value then. It’s best not to spread rumours, since such behaviour can easily spread to other, more important issues in society.

What’s the big deal? Google already do this to Android, AND they also host hackathons where they invite people to do this and reward those who do.

Plus they have an ongoing bug bounty program, so at any time you can submit a bug/hack and get paid.

Apple don’t have a history of doing this at all. This is literally the first time they are doing it because of the bad PR from Pegasus.

It shouldn’t be applauded. They should be roasted for not having done this sooner

I haven’t heard about Google testing hardware-based attacks on their chips, which I suppose could be because Android runs on a wide variety of chips instead of a few home-developed ones. Besides that, Apple has had a bug bounty program for ages that pays well and covers a wide range of attacks. Not hosting open hackathons perhaps has something to do with brand image, but Apple shouldn’t be discredited when it comes to rewarding people who find bugs and exploits.

Not sure about their own chips, but they definitely hack the daylights out of Android.

Apple has a bounty program, but it doesn’t work. Over the years I’ve read multiple stories of devs who submitted show-stopping bugs and never got anything back from Apple. And they take MONTHS to release a fix.

The Google Security Team found a massive hole in iOS, reported it to Apple, and after months of waiting with no feedback or fix released, they published it openly. Only THEN did Apple suddenly acknowledge it and release a fix.

Apple are the biggest hypocrites. They claim to be private and not collect data, but literally everything you do on your phone they can see and collect. Everything in iCloud is on their servers. They can see all your browsing history in Safari.

The only difference between them and Google is that they claim not to sell the data. But as we know, Edward Snowden told us that the CIA/FBI etc. have full access to all the servers of the Big Tech companies under the Patriot Act. They can decrypt and see your data anytime.

So in other words not really private. None of them are.

Sadly, the same thing has been happening on the Android side (a quick Google search seems to confirm this): possible exploits reported but not patched in a timely manner. In general I feel like the Apple bug bounty program has been swift, although it does fail from time to time to reward the original reporter. I haven’t been keeping a close eye on the Android side, but I imagine the same has been happening. Apple has also started to offer e2e encryption for iCloud data, blocking even CIA/FBI access. And besides that, seeing as I’m based in Europe (and so my data should be too), I don’t feel like the Patriot Act has any impact on me.

I’m assuming that Big Tech holds the encryption keys, which they give the government access to in order to decrypt your data. The point of the Act is to allow law enforcement to legally access data in order to investigate possible terrorists.

It wouldn’t be a very useful Act if they didn’t hold the decryption keys. So they definitely do.

And Snowden is still wanted, which means the info he leaked is accurate.

I too am in the EU, but I don’t trust any government. I’m sure they can also get access from the US if they really want to. No one’s data is truly safe if you’re using Big Tech.

Having someone else with the decryption keys is not how e2e works. E2E is a pretty solid and proven system, and I have yet to find a solid source about “big tech holding the keys”.

Search for Project Prism

Here’s one exhibit:

"The National Security Agency has obtained direct access to the systems of Google, Facebook, Apple and other US internet giants, according to a top secret document obtained by the Guardian.

The NSA access is part of a previously undisclosed program called Prism, which allows officials to collect material including search history, the content of emails, file transfers and live chats, the document says.

The Guardian has verified the authenticity of the document, a 41-slide PowerPoint presentation – classified as top secret with no distribution to foreign allies – which was apparently used to train intelligence operatives on the capabilities of the program. The document claims “collection directly from the servers” of major US service providers."

Source: https://www.theguardian.com/world/2013/jun/06/us-tech-giants-nsa-data

That was back in 2013. I’m sure the tool is even more advanced now. This is why Snowden fled - he exposed this.

Yeah, as the previous commenter said, e2e encryption just doesn’t allow anyone but the owner of the keys to access the data. E2E is prized because of this. There are two keys: public and private. If you and I are both using iMessage and you send me a message, it is encrypted on your device using my public key, and only my private key, which stays on my device, can decrypt it. Only you and I can ever see those messages unless someone gets access to one of our phones.
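In the textbook public-key model, the sender encrypts with the recipient’s public key, and can additionally sign with their own private key so the recipient can check who sent it. Here is a toy sketch of that model; the tiny primes, the names, and the per-character encryption are illustrative assumptions, and iMessage’s real protocol is far more involved (large keys, padding, hybrid encryption).

```python
# Toy "textbook" RSA with tiny primes and no padding: insecure, illustration only.

def make_keypair(p, q, e=65537):
    """Return (public_key, private_key) built from two distinct primes."""
    n = p * q
    phi = (p - 1) * (q - 1)
    d = pow(e, -1, phi)  # modular inverse of e (Python 3.8+)
    return (n, e), (n, d)


def encrypt(public_key, text):
    """Encrypt each character with the recipient's public key."""
    n, e = public_key
    return [pow(ord(ch), e, n) for ch in text]


def decrypt(private_key, ciphertext):
    """Only the matching private key turns the numbers back into text."""
    n, d = private_key
    return "".join(chr(pow(c, d, n)) for c in ciphertext)


def sign(private_key, text):
    """The sender signs with their own private key."""
    n, d = private_key
    return [pow(ord(ch), d, n) for ch in text]


def verify(public_key, text, signature):
    """Anyone with the sender's public key can check the signature."""
    n, e = public_key
    return len(text) == len(signature) and all(
        pow(s, e, n) == ord(ch) for ch, s in zip(text, signature)
    )


alice_public, alice_private = make_keypair(1009, 1013)
bob_public, bob_private = make_keypair(1019, 1021)

# Alice -> Bob: encrypt with Bob's public key, sign with Alice's private key.
message = "meet at noon"
ciphertext = encrypt(bob_public, message)
signature = sign(alice_private, message)

# Bob decrypts with his private key and checks Alice's signature.
print(decrypt(bob_private, ciphertext))          # meet at noon
print(verify(alice_public, message, signature))  # True
```

Nothing in this exchange requires a third party to hold Bob’s private key, which is the point being made about e2e.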

Now, iCloud is backed up to Apple’s servers. If you have iMessage backup enabled, it’s possible, and maybe even likely tbh, that Apple has access to recent messages. iMessage is also (potentially, but again in this case, I’d argue likely) susceptible to man-in-the-middle attacks. Because you need my public key to encrypt messages to me, if you receive someone else’s public key instead, they now have your messages and I don’t.
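The key-substitution attack can be sketched with the same kind of toy RSA. The `directory` dict is a hypothetical stand-in for a key server that has been tampered with, and `fingerprint` is a hypothetical helper showing the usual defence: comparing a short digest of the key out of band.

```python
import hashlib

# Toy textbook RSA (tiny primes, no padding): insecure, illustration only.
def make_keypair(p, q, e=65537):
    n, phi = p * q, (p - 1) * (q - 1)
    return (n, e), (n, pow(e, -1, phi))


def encrypt(public_key, text):
    n, e = public_key
    return [pow(ord(ch), e, n) for ch in text]


def decrypt(private_key, ciphertext):
    n, d = private_key
    return "".join(chr(pow(c, d, n)) for c in ciphertext)


def fingerprint(public_key):
    # Short, human-comparable digest of a public key.
    n, e = public_key
    return hashlib.sha256(f"{n}:{e}".encode()).hexdigest()[:16]


bob_pub, bob_priv = make_keypair(1019, 1021)
mallory_pub, mallory_priv = make_keypair(1031, 1033)

# Hypothetical key directory in which Mallory's key has replaced Bob's.
directory = {"bob": mallory_pub}

key_for_bob = directory["bob"]  # the sender trusts whatever comes back
ciphertext = encrypt(key_for_bob, "the meeting moved to 3pm")
print(decrypt(mallory_priv, ciphertext))  # the meeting moved to 3pm

# Out-of-band check: compare the received key with the one Bob actually owns.
print(fingerprint(key_for_bob) == fingerprint(bob_pub))  # False
```

The encryption itself is never broken here; the attack works entirely by controlling which public key the sender trusts.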

The DEA and FBI have both had documents leaked mentioning that they can’t track, trace, or decrypt iMessage. The same is true for WhatsApp or any e2e messaging service.

Again, this is all contingent on not using iCloud backup. If you use iCloud backup, then the encryption keys can be accessed with the proper authority. I assume (but haven’t looked into it) that Google is the same. If you don’t back up your e2e-encrypted content, it cannot be decrypted without the private key only you have access to. Of course, iCloud backup is enabled by default, so for the vast majority of Apple users, their messages and information are all available anyway and none of this matters.
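The backup point comes down to who can read the key. A toy model follows: the two backup dicts are hypothetical, and the SHA-256 counter-mode keystream is a stand-in cipher chosen so the example needs only the standard library (an assumption, not what Apple uses). It contrasts a backup the provider can read with one whose key is wrapped by a recovery key that stays with the user, roughly like the opt-in end-to-end encrypted iCloud option.

```python
import hashlib
import secrets


def keystream_xor(key: bytes, data: bytes) -> bytes:
    """Toy stream cipher: XOR data with SHA-256(key || counter) blocks.

    Applying it twice with the same key returns the original data.
    Illustration only; real code should use a vetted AEAD cipher.
    """
    out = bytearray()
    for start in range(0, len(data), 32):
        counter = (start // 32).to_bytes(8, "big")
        pad = hashlib.sha256(key + counter).digest()
        out.extend(b ^ p for b, p in zip(data[start:start + 32], pad))
    return bytes(out)


message_key = secrets.token_bytes(32)  # lives on the device
stored_message = keystream_xor(message_key, b"see you at 8")

# Case 1: the backup holds the key in a form the provider can read.
standard_backup = {"message_key": message_key, "messages": [stored_message]}
provider_view = keystream_xor(standard_backup["message_key"],
                              standard_backup["messages"][0])
print(provider_view)  # b'see you at 8'

# Case 2: the key is wrapped with a recovery key that never leaves the user.
recovery_key = secrets.token_bytes(32)
e2e_backup = {"wrapped_key": keystream_xor(recovery_key, message_key),
              "messages": [stored_message]}
# The provider stores only wrapped_key and ciphertext; the user can unwrap.
unwrapped = keystream_xor(recovery_key, e2e_backup["wrapped_key"])
print(keystream_xor(unwrapped, e2e_backup["messages"][0]))  # b'see you at 8'
```

In both cases the messages are encrypted at rest; what changes is whether the party holding the backup also holds a usable key.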

In addition, iMessage uses a directory lookup to find the correct public key for your recipient. Apple does keep this lookup information (I’m unsure for how long). This means that law enforcement (with a warrant) can see whom you message and how often. That alone is information we really don’t want people having.
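To see why lookup metadata matters even without any message content, here is a sketch over a made-up lookup log. The log format, names, and helper functions are hypothetical, and real clients may cache keys, so lookup counts would only approximate how often people message each other.

```python
from collections import Counter

# Hypothetical server-side log of key lookups: (time, requester, target).
# No message content appears here, only who looked up whose key.
lookup_log = [
    ("09:15", "alice", "bob"),
    ("09:16", "alice", "bob"),
    ("12:02", "alice", "carol"),
    ("18:40", "alice", "bob"),
    ("19:05", "dave", "alice"),
]


def contact_frequency(log, user):
    """Count how often `user` looked up each recipient's key."""
    return Counter(target for _, requester, target in log if requester == user)


def who_contacts(log, user):
    """Everyone who looked up `user`'s key, i.e. who tried to message them."""
    return sorted({requester for _, requester, target in log if target == user})


print(contact_frequency(lookup_log, "alice").most_common())  # [('bob', 3), ('carol', 1)]
print(who_contacts(lookup_log, "alice"))                     # ['dave']
```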

So the moral is: if you don’t use backups for e2e encrypted communication, your content cannot be read externally. It’s just the way cryptography works.

This doesn’t mean that companies do not share information with law enforcement. There is a lot of unencrypted information that Apple, Google, et al. will share with government agencies when a warrant or subpoena is served. Your phone provider will also share information with them. On top of that, SMS and MMS messages sent from any device lack end-to-end encryption and are easily discoverable.

Tl;dr: e2e encryption is secure, as long as you follow best practices and have an idea of how encryption works.

Isn’t this just pentesting?

But it’s also marketing.

Definitely applauding them for going hard and testing their stuff.

I’m thinking of how Sony and Nintendo had piracy issues that completely ruined their hardware sales. Then again, they can kinda get away with it in the short run by continuing to release quality software.

Apple doesn’t have that luxury as they’ve cemented themselves as making good bundled hardware+software. If any part of that gets crippled, they’re fucked.

Are there numbers showing any meaningful harm to Sony or Nintendo?
