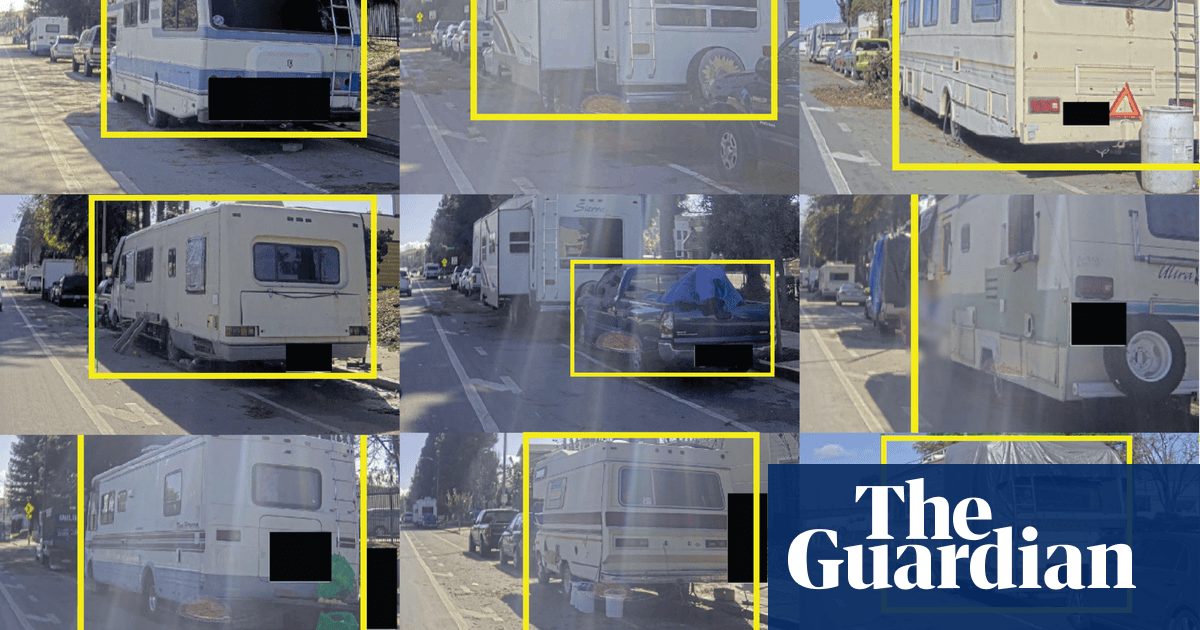

Last July, San Jose issued an open invitation to technology companies to mount cameras on a municipal vehicle that began periodically driving through the city’s District 10 in December, collecting footage of the streets and public spaces. The images are fed into computer vision software and used to train the companies’ algorithms to detect the unwanted objects, according to interviews and documents the Guardian obtained through public records requests.

They’ve already been using it to give probable cause and as evidence that all black people are the same and therefore guilty. I’m referring to facial recognition.

In terms of legal precedent this may be a good thing in the long run.

The software billed as “AI” these days is half-baked. If one or more law enforcement agencies point to the new piece of software the city deployed as their probable cause to make an arrest, it won’t take long for that to get challenged in court.

This sets the stage for the legality of the software to be challenged now (in half-baked form) and to set a legal standard demanding high accuracy and/or human assessment when making an arrest.

This kind of software is already illegal in the EU. AI cannot be used for surveillance or to make decisions about people or arrest them.

https://www.europarl.europa.eu/topics/en/article/20230601STO93804/eu-ai-act-first-regulation-on-artificial-intelligence

Good for the EU I guess?

I was talking about it in the US, where the article is focused.

Yes, but the EU is setting legal precedent here that American legislation should follow.

Oh boy, I have some news for you …

Defeatism only helps the thugs who benefit from chaos.

I think you may be miscalculating the opinion of the American public. “It’s European law” isn’t exactly a selling point for a lot of folks.

I think you’re more optimistic than I am about a conservative appeals court judge being able to first understand that the technology doesn’t work very well, then actually give a shit about it.

I’m arguing against the technology. I believe that the decision to make an arrest should fall to a human being and that individual should be allowed to override a bad call by the shit being billed as AI.

There’s a real possibility that law enforcement agencies may try to foist responsibility for decisions onto software and require officers to abide by the recommendations of said software. That would be a huge mistake.