Want to wade into the snowy surf of the abyss? Have a sneer percolating in your system but not enough time/energy to make a whole post about it? Go forth and be mid: Welcome to the Stubsack, your first port of call for learning fresh Awful you’ll near-instantly regret.

Any awful.systems sub may be subsneered in this subthread, techtakes or no.

If your sneer seems higher quality than you thought, feel free to cut’n’paste it into its own post — there’s no quota for posting and the bar really isn’t that high.

The post-Xitter web has spawned so many “esoteric” right-wing freaks, but there’s no appropriate sneer-space for them. I’m talking redscare-ish, reality-challenged “culture critics” who write about everything but understand nothing. I’m talking about reply-guys who make the same 6 tweets about the same 3 subjects. They’re inescapable at this point, yet I don’t see them mocked (as much as they should be).

Like, there was one dude a while back who insisted that women couldn’t be surgeons because they didn’t believe in the moon or in stars? I think each and every one of these guys is uniquely fucked up and if I can’t escape them, I would love to sneer at them.

(Credit and/or blame to David Gerard for starting this.)

Slopocalypse Now h/t The Syllabus

For context, Kunzru wrote the novel Red Pill a few years back.

Candace is a pioneer. Following her, we are exiting the age of the public sphere and entering a time of magic, when signs and symbols have the power to reshape reality. Consider the “Medbed,” a staple of QAnon-adjacent right-wing conspiracy culture. Medbeds are one of the many things about which “they” are not telling “you”; they can supposedly regenerate limbs and reverse aging. How evil would you have to be to deny such a boon to We the People? In late September, Trump posted an AI-generated video of himself promoting the scam, promising that every faithful supporter would be given a card that would give them access to this magic technology. Trump posted it because it made him look good, a leader healing the sick, but also because it is a way to hyperstition a version of this fiction into reality. No one will really be cured, of course, because the Medbed doesn’t exist. Except now it is someone’s job to make sure it does: The president is a powerful magician who never tells a lie, so some loyal redhats will have to be given cards that let them lie down in some kind of cargo-cult version of a Medbed. Perhaps it will be a job for TV’s own Dr. Oz, who has crossed to the other side of the screen as the administrator of the Centers for Medicare & Medicaid Services.

God we live in the dumbest possible world.

This is not art as critique. Critique is just sincere-posting, dutifully pointing out yet again that the Medbed isn’t “real.” Art can mess with our masters in ways we don’t yet fully understand.

I hope so. Jesse Welles getting on Colbert and playing Red shows some people are moving in that direction, but it is also definitely sincere-posting, and ultimately that kind of performance just doesn’t pay the bills like it would if he went Truck Jeans Beer. Eddington seems to have gotten under some people’s skins in an interesting way… And I’m skeptical that /any/ novel would have any impact or reach outside the NYT class, what with having to actually read something.

Hyperstition is such a bad neologism: apparently doubleplus superstition equals self-fulfilling prophecy (transitive)? They don’t even bother to verb it properly… Nick Land got a nonsense word stuck in his head and now there’s a whole subculture of midwit thought leader wannabes parroting that shit.

If only people had stuffed Land in a locker instead of buying drugs off him.

Nothing makes you grounded like stuffing Land in a locker.

Fun detail about this hype about ‘innovation’ (there was not just a poster but also an email): aircraft carriers use physical chits and a table for their planning (bonus: can’t be hacked or EMPed). So it’s gonna be fun to see if he tries to get rid of that, and we might see a carrier sunk in the Venezuelan/Taiwan wars.

Old Man Stallman comes out swinging against ChatGPT specifically, adding it to the long long list of stuff he doesn’t like. For some reason HN is mad at this, as if RMS saying slop is good actually would convince anyone normal to start using it

The comments are filled with people thinking they are smart by questioning what human intelligence is and how we can trust ourselves. The kool-aid is quite strong. I am no Stallman lover and have bumped into him more than once locally, but I do think the fella who started many of the common computing tools and was part of the MIT AI lab for a bit may know a thing or two. Or maybe I have been eating my toe too much.

Questioning the nature of human intelligence is step 1 in promptfondler whataboutism.

The orange-site whippersnappers don’t realize how old artificial neurons are. In terms of theory, the Hebbian principle was documented in 1949, and the McCulloch–Pitts artificial neuron was proposed in 1943 in an article with the delightfully dated name “A logical calculus of the ideas immanent in nervous activity”. In 1957, the Mark I Perceptron was introduced; in modern parlance, it was a configurable image classifier with a single layer of hundreds-to-thousands of neurons and a square grid of dozens-to-hundreds of pixels. For comparison, MIT’s AI lab was founded in 1970. RMS would have read about artificial neurons as part of their classwork and research, although it wasn’t part of MIT’s AI programme.

Are there even any young people we could plausibly call whippersnappers on orange site anymore? It feels like they’re all well into their 30s/40s at this point.

I miss n-gate but that was what, 8 years ago.

But in fairness to actual whippersnappers, and to your point, the ’56 Dartmouth Workshop onward privileged symbolic AI over anything data-driven up through the first AI winter (until roughly the 90s, when the balance shifted), and really warped the discipline’s understanding of its own influences and history. If 70s RMS was taught anything about neural nets, their relevance and importance would probably have been minimized in comparison to expert systems in Lisp or whatever Minsky was up to.

In college I took an AI class and it was just a lisp class. I was disappointed. Also the instructor often had white foam in the corners of his mouth, so I dropped it.

My college used the green Russell & Norvig text, which had (checking…) 12 pages on neural nets out of 1000 pages. I liked the class well enough, but we used Java 1.3 and Lisp would have been better.

I miss n-gate but that was what, 8 years ago.

Only four (August 2021).

It might have already been posted here, but this Wikipedia guide to recognizing AI slop is such a good resource.

A fairly good and nuanced guide. No magic silver-bullet shibboleths for us.

I particularly like this section:

Consequently, the LLM tends to omit specific, unusual, nuanced facts (which are statistically rare) and replace them with more generic, positive descriptions (which are statistically common). Thus the highly specific “inventor of the first train-coupling device” might become “a revolutionary titan of industry.” It is like shouting louder and louder that a portrait shows a uniquely important person, while the portrait itself is fading from a sharp photograph into a blurry, generic sketch. The subject becomes simultaneously less specific and more exaggerated.

I think it’s an excellent summary, and it connects with the “Barnum effect” of LLMs, making them appear smarter than they are. It also shows that it’s not the presence of certain words but the absence of certain others (and, well, content) that is a good indicator of LLM-extruded garbage.

Also, from this guide you can explain in one step why people with working bullshit detectors tend to immediately clock LLM output, while the executive class, whose whole existence is predicated on not discerning bullshit, are its greatest fans. A lot of us have seen A Guy In A Suit do this: intentionally avoid specifics to make himself/his company/his product look superficially better. Hell, the AI hype itself (and the blockchain and metaverse nonsense before it) relies heavily on this: never say specifics, always say “revolutionary technology, future, here to stay”, and quickly run away if anyone tries to ask a question.

I’ve come to feel that I prefer the business-executive empty over the LLM empty; at least the first one usually expresses personality. It’s never entirely empty.

Although I never use LLMs for any serious purpose, I do sometimes give LLMs test questions in order to get firsthand experience on what their responses are like. This guide tracks quite well with what I see. The language is flowery and full of unnecessary metaphors, and the formatting has excessive bullet points, boldface, and emoji. (Seeing emoji in what is supposed to be a serious text really pisses me off for some reason.) When I read the text carefully, I can almost always find mistakes or severe omissions, even when the mistake could easily be remedied by searching the internet.

This is perfectly in line with the fact that LLMs do not have deep understanding, or the understanding is only in the mind of the user, such as with rubber duck debugging. I agree with the “Barnum-effect” comment (see this essay for what that refers to).

Doing a quick search, it hasn’t been posted here until now - thanks for dropping it.

In a similar vein, there’s a guide to recognising AI-extruded music on Newgrounds, written by two of the site’s Audio Moderators. This has been posted here before, but having every “slop tell guide” in one place is more convenient.

“This has been posted here before, but having every “slop tell guide” in one place is more convenient.”

Man, this is why human labour still reigns supreme. It’s such a small thing to consider the context in which these resources would be useful and to group together related resources as you have done here, but actions like this are how we can genuinely construct new meaning in the world. Even if we could completely eradicate hallucinations and nonspecific waffle in LLM output, they would still be woefully inept at this kind of task — they’re not good at making new stuff, for obvious reasons.

TL;DR: I appreciate you grouping these resources together for convenience. It’s the kind of mindful action that makes me think usefully about community building and positive online discourse.

It’s also the sort of thing that you wouldn’t actually think to ask for until it became quite hard to sort out. Creating this kind of list over time as good resources are found is much more practical, and not the kind of thing that would likely be automated.

Exactly! It’s basically a form of social informational infrastructure building

Some rando mathematician checks out some IQ-related twin studies and finds out (gasp) that Cremieux is a manipulative liar with an agenda

https://davidbessis.substack.com/p/twins-reared-apart-do-not-exist

Said author is sad that Paul Graham retweets the claim and does not draw the obvious conclusion that PG is just a bog-standard Silicon Valley VC pseudo-racist.

HN is not happy and a green username calling themselves “jagoff” leads the charge

Very good read actually.

Except, from the epilogue:

People are working to resolve [intelligence heritability issue] with new techniques and meta-arguments. As far as I understand, the frontline seems to be stabilizing around the 30-50% range. Sasha Gusev argues for the lower end of that band, but not everyone agrees.

The not-everyone-agrees link is to acx and siskind’s take on the matter, who unfortunately seems to continue to fly under the radar as a disingenuous eugenicist shitweasel with a long-term project of using his platform to sane-wash gutter racists who pretend at doing science.

Yeah, the substacker seems either naive or genuinely misinformed about Siskind’s ultimate agenda, but in their defense Scott is really really good at vomiting forth torrents of beige prose that obscure it.

Here’s the bottom line: I have no idea what motivated Cremieux to include Burt’s fraudulent data, but even without it his visual is highly misleading, if not manipulative

Well, the latter part of this sentence gives a hint at the actual reason.

And the first comment is by Hanania lol, trying to debunk the fraud allegations by saying that is just how things were done back then, while also not realizing he didn’t understand the first part of the article. Amazing how these IQ anon guys always react quickly, and to everything. This was also quite an issue on Reddit, where even a small dismissal of IQ could lead to huge (copy-pasted) rambling defenses of IQ.

The author is also calling Richard out on his weird framing.

It’s fascinating to watch Hanania try to do politics in a comment space more focused on academic inquiry, and how silly he looks there. He can’t participate in this conversation without trying to make it about social interventions and class warfare (against the poor), even though I don’t know that Bessis would disagree that social interventions can’t significantly increase the number of mathematical or scientific geniuses in a country (1). Instead, Hanania throws out a few brief, unsupported arguments, gets asked for clarification and validation, accuses everyone of being woke, and gets basically ignored as the conversation continues around him.

This feels like the kind of environment that Siskind and friends claim to want to create, but they seem constitutionally incapable of actually doing the “ignore Nazis until they go away” part.

(1) From his other post linked in the thread, he credits that level of aptitude to idiosyncratic ways of thinking that are neither genetically nor socially determined, but can be actively cultivated through various means. The reason that the average poor Indian boy doesn’t become Ramanujan is the same reason you or I or Ramanujan’s own hypothetical twin brother didn’t: we’re not Ramanujan. This doesn’t mean that we can’t significantly improve our own ability to understand and use mathematical thinking.

Honestly I’m kinda grateful for people who dig into and analyze the actual data in seeming ignorance of the political context of the people pushing the other side. It’s one thing to know that Jordan “Crimieux” Lasker and friends are out here doing Deutsch Genetik and another to have someone cleanly and clearly lay out exactly why they’re wrong, especially when they do the work of assembling a more realistic and useful story about the same studies.

Disney invests $1B in OpenAI, allowing access to all Disney characters

https://thewaltdisneycompany.com/disney-openai-sora-agreement/

So the code red is over now, I guess?!?!

(who ordered the code red.gif)

Somebody on bsky mentioned this is prob because Disney wants to be seen on the stock market as a tech company, and not a cartoon/theme park company.

(See how Tesla went from cars to self driving to now robots)

Remember the flood of offensive Pixaresque slop that happened in 2023? We’re gonna see something similar thanks to this deal for sure.

Of course Disney loves its cease-and-desists, such as the one to Character.AI in October and one today to Google: https://variety.com/2025/digital/news/disney-google-ai-copyright-infringement-cease-and-desist-letter-1236606429/

Is this actually because of brand protection or just shareholder value? Racist, sexist, and all around abhorrent content is now easily generated with your favorite Disney owned characters just as long as you do it on the approved platform.

linux tech issue:

My desktop computer started with just Windows on it. No issues. A while back I dual-booted Linux Mint and Windows, and pretty frequently when rebooting I’d have to deal with the computer insisting that I run fsck. I ended up switching back to just Windows and thereafter had no issues.

This week I installed ubuntu as my sole OS and it’s not going very well. I’ve had to reinstall the OS a few times already. After the install at first everything is normal, but slowly I start getting hints of things going wrong. Certain programs will just not start, that kind of thing. Eventually I restart the computer and it will present me with a demand I run fsck. I do so, and now tons of system files are gone, the install is effectively dead, and I’m back to square zero.

I don’t understand what’s going on. I used the same media to install ubuntu on my laptop, and that works perfectly. I’ve had a version of this problem across two distros. And for some reason it doesn’t occur with windows! Complete mystery. Anyone have ideas?

I did it, I went and made an Official Public Comment IRL:

In UCLA’s Strategic Plan, Goal 1 is to “Deepen our engagement with Los Angeles” and Goal 5 is to “Become a more effective institution”. By engaging with Los Angeles businesses, UCLA can both get better terms, prices, and services and support the local economy. Buy Local, Spend Local.

The federal government encourages this with Small Business Innovation Research and Small Business Technology Transfer grants, among other things. Furthermore, the State of California requires a portion of its spending go toward certified Small Businesses.

And yet, the University apparently awarded a contract reportedly worth hundreds of thousands to millions of dollars to OpenAI. I have not found any documentation of an open Request for Proposals or competitive process for that award.

My question is:

If there was an RFP, where was it publicly posted, and if there was no RFP, why not, and were Los Angeles vendors or small businesses evaluated as alternatives, as recommended by UC policy and state law?

Given the scale of this spending and the context of a budget crisis, transparency, compliance, and small-business participation are critical to our effectiveness and engagement.

I’m asking for clarity on how this decision was made, how it aligns with procurement guidelines and University goals, and how DTS plans to ensure that local and small businesses are meaningfully included moving forward.

Thank you.

The response has a high probability of being evasive bullshit, but will be worth archiving no matter what.

Get their asses

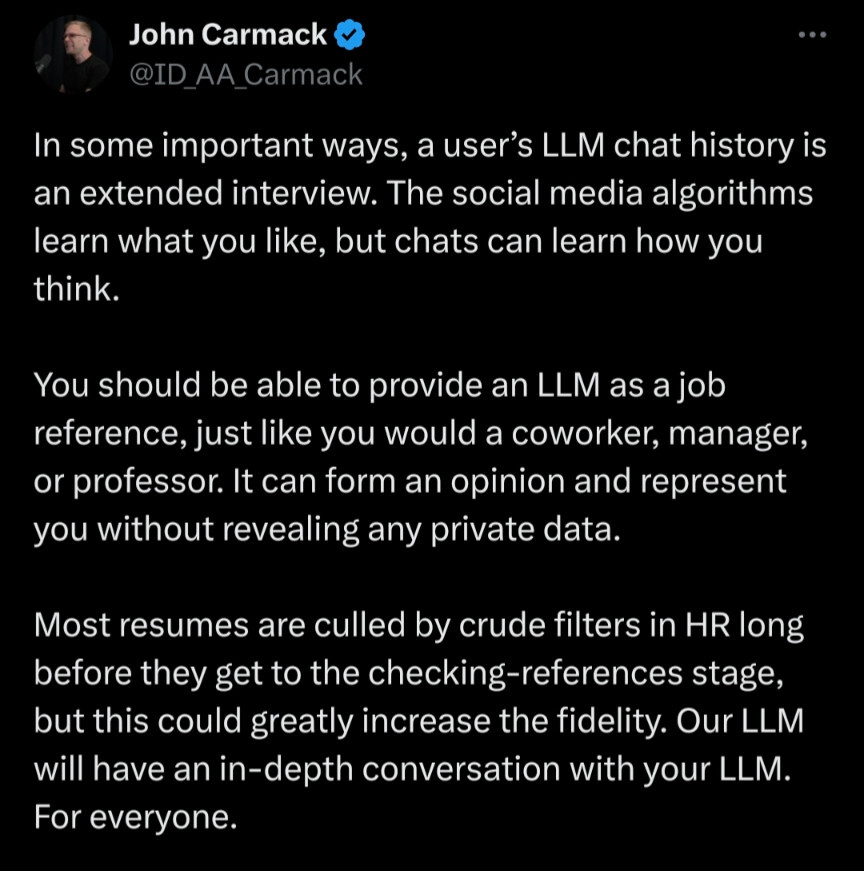

“you should be able to provide an LLM as a job reference”

source https://x.com/ID_AA_Carmack/status/1998753499002048589

What, that doesn’t even make sense. I don’t type what I’m thinking. (My only real usage of LLMs was trying to break them, so imagine the output of that. And you could then imagine what you think I was trying to attempt in messing with the system. But then you would be doing the work. Say you see me send the same message twice. A logical conclusion would be that I tried to see if it gave different results when prompted the same way twice. However, it is more likely I just made a copy-paste error and accidentally sent the wrong text the second time. So the person reading the logs is doing a lot of work here.) Ignoring all that, he also didn’t think of the next case: people using an LLM to fake chat logs to optimize getting hired. Good way to hire a lot of North Koreans.

Sure John, let me know when you’ve got that set up. Something that retains my entire search/chat history, caches the responses as well, and pulls all that into the context window when it’s time to generate a job referral. Maybe you’ll be able to do something shotgunning together remaindered hardware this time next year? I’ll be waiting.

I’m legitimately disappointed in John Carmack here. He should be a good enough programmer to understand the limitations here, but I guess his business career has driven him in a different direction.

Nah, he has brainrot. He deadnamed and misgendered Rebecca Heineman in his eulogy of her. Transphobia seems to really make people worse at thinking.

(Not a big shock, considering how bad his ‘epic own’ answer was to the person asking him if he would hire more women. He said: “we are having a hard time hiring all the people that we want. It doesn’t matter what they look like.” That sounds like a great own, but it leaves one big question: why aren’t you trying to educate more people in what you want, then? What are you doing to fix that problem if it is such a big problem for you? Which then leads to: how will you ensure that this teaching system has women in it, etc.)

Carmack is a Gen-X Texan mostly known for using hardmode C to code an FPS. He’s so drenched in nerd testosterone I’d be surprised if he wasn’t background-radiation-level non-woke. Not saying he shouldn’t be as a human being, just that it would be an uphill battle for him.

At least we have Romero. He had an edgelord phase, but only when he was younger, and he realized he fucked up.

There’s something intensely satisfying about seeing this the same week as id Software becoming a union shop.

This is offensively stupid lol

He’s become the Linus Pauling of video games.

definitely taking Vitamin L

regarding my take in the previous stubsack, it does seem like crusoe intends to use these gas turbines as backup. as of 31.07.2025 they had five turbines installed (who knows if connected), with an obvious place for five more, and some pieces of them (smokestacks mostly) already in place. it does make sense that as of the october announcement, they had the first tranche of 10 installed or at least delivered. there’s no obvious prepared place where they intend to put the next 19 of them, and that’s just the stuff from GE, with 21 more coming from proenergy (maybe that’s for a different site?). that said, it’s texas with the famously reliable ercot, which means that on top of using these during shortages, they might be paying market rates for electricity, so even with power available, they might turn the turbines on when electricity gets ridiculously expensive. i’m sure that dispatchers will love some random fuckass telling them “hey, we’re disconnecting 250MW load in 15 minutes” when the grid is already unstable due to being overloaded

New edition of AI Killed My Job, focusing on how AI’s fucked the copywriting industry.

A bit of uplifting news:

id software is now a union shop

the fifth episode of odium symposium, “4chan: the french connection”, is now up. roughly the first half of the episode is a dive into sartre’s theory of antisemitism. then we apply his theory to the style guide of a nazi news site and the life of its founder, andrew anglin

favorite one so far! It’s like graduate-level 1-900 Hotdog

I need to quit clicking, my yt recommendations are so cursed now.

Love the Edgar Allan Poe thing at 3:30 though, wtf. Also, where did he find the Sephiroth at 4:19?

…so it’s not a Dr Who thing.

No, no. I’m not mad. Just… disappointed. Existentially speaking.

And here I was innocently thinking this was something about the excellent lesbian novel This Is How You Lose the Time War 😔

Edit your YouTube history to remove the cursed things and purify your algorithm.

Not in a position to watch that, but looking at the description, please tell me they are joking.

Not really.

Now I’m sad. And worried.

Boom Aerospace, not content with attempting the next Concorde, have gotten a little sidetracked. Funnily enough, they didn’t show any footage of the engine actually working. Surely it’s whisper quiet and won’t be a massive pain to live next to.

I would simply not name my airplane company “Boom”.

I regret I have but one upvote to give to this

The best I ever saw was a reply to a news story to the effect of, “If I were ever invited swimming in the Murderkill River, I would just not go.”

(This might be the original. Then again, it might not.)

okay, that’s the missing piece (? not the last): 1GW from GE, 1GW from proenergy, and 1.2GW from this fuckass startup that nobody has heard of, plus either a missing 1.2GW of gas turbines or a 1.2GW grid connection, gets crusoe almost 4.5GW of power

also you don’t need supersonic jet engines for that, these will be actively worse in reality for stationary power generation. they do that because you can haul them in a truck

Meanwhile China is adding power capacity at a wartime pace—coal, gas, nuclear, everything—while America struggles to get a single transmission line permitted.

thank Jack Welch for deindustrialization then

we built something no one else has built this century: a brand-new large engine core optimized for continuous, high‑temperature operation.

Lockheed Martin: am i a joke to you? (also, lots of manufacturers for proper CCGT turbines do just that)

read: “our product development is a black hole of cost, and our big investors are breathing down our necks to grab this cash while it’s there”

This is such a pivot, from “you can soon fly between capitals in half the time” to “this screaming jet engine will soon be disturbing your sleep 24/7”

It never had a market. Wait 3h at the airport just to get on a 3-5x more expensive flight so that you fly 3h instead of 5h? Make it make sense. For people who don’t wait at airports anyway, renting demilitarised MiG-29s would make more sense.

I assume they’re thinking about transcontinental flights most of all. Dubai->LA or whatever.

Honestly you could probably pitch that to the Musk/Thiel set pretty easily by playing up how masculine fighter pilots are and disconnecting but not removing the rear flight controls. Let them push buttons and feel cool.

If anyone can figure out how to get ex-military F-15s for joyrides, it’s them. I guess Thiel would be fine as a passenger without the pretending, but Musk wouldn’t, and learning to fly takes a fuckton of effort, something he’s allergic to. Either one of them having a supersonic chauffeur that has to go everywhere their jet does is the exact kind of nonsense i’d expect in this timeline, and i don’t think that either is actually healthy enough to become a pilot in the first place

Larry Ellison has or had a working MiG.