Want to wade into the snowy surf of the abyss? Have a sneer percolating in your system but not enough time/energy to make a whole post about it? Go forth and be mid: Welcome to the Stubsack, your first port of call for learning fresh Awful you’ll near-instantly regret.

Any awful.systems sub may be subsneered in this subthread, techtakes or no.

If your sneer seems higher quality than you thought, feel free to cut’n’paste it into its own post — there’s no quota for posting and the bar really isn’t that high.

The post-Xitter web has spawned so many “esoteric” right-wing freaks, but there’s no appropriate sneer-space for them. I’m talking redscare-ish, reality-challenged “culture critics” who write about everything but understand nothing. I’m talking about reply-guys who make the same 6 tweets about the same 3 subjects. They’re inescapable at this point, yet I don’t see them mocked (as much as they should be).

Like, there was one dude a while back who insisted that women couldn’t be surgeons because they didn’t believe in the moon or in stars? I think each and every one of these guys is uniquely fucked up and if I can’t escape them, I would love to sneer at them.

(Credit and/or blame to David Gerard for starting this. Merry Christmas, happy Hanukkah, and happy holidays in general!)

UIUC prof John Gallagher had a Christmas post, “Firm reading in an era of AI delirium”: could reading synthetic text be like eating ultra-processed foods?

idea: end of year worst of ai awards. “the sloppies”

Slop can never win, even at being slop. Best they can do is sloppy seconds.

“Top of the Slops”

On a related theme:

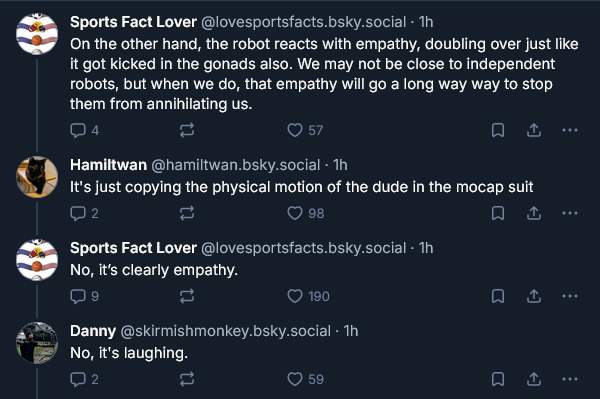

man wearing humanoid mocap suit kicks himself in the balls

https://bsky.app/profile/jjvincent.bsky.social/post/3mayddynhas2l

A pal: “I take back everything I ever said about humanoid robots being useless”

And… same

From the replies:

Love how confident everyone is “correcting” you. ChatGPT is literally my son’s therapist, of course cutting edge AI can empathize with a guy getting kicked in the balls lmao

I don’t want to live on this planet anymore.

this made my day, thx

I figure I might as well link the latest random positivity thread here in case anyone following the Stubsack had missed it.

In the future, I’m going to add “at scale” to the end of all my fortune cookies.

I am only mildly concerned that rapidly scaling this particular posting gimmick will cause our usually benevolent and forbearing mods to become fed up at scale

I’m a lot more afraid of the recursive effect of this joke. We could have at scale at scale.

Don’t worry, I’m sure Lemmy is perfectly capable of tail call optimization at scale

is that the superexponential growth that lw tried to warn us about?

Yes! at scale at scale at scale at scale

you will experience police brutality very soon, at scale

I will never forgive Rob Pike for the creation of the shittiest widely adopted programming language since C++, but I very much enjoy this recent thread where he rages about Anthropic.

for the creation of the shittiest widely adopted programming language since C++

Hey! JavaScript is objectively worse, thank you very much

I strongly disagree. I would much rather write a JavaScript or C++ application than ever touch Rob Pike’s abortion of a language. Thankfully, other options are available.

Meh, at least go has a standard library that’s useful for… anything, really

Yes, I like a good standard library; it’s probably one of the reasons why Java and C# are so popular with enterprise software development. They are both languages I consider better than go. You can write horrible code in both of them, but unlike with go you aren’t forced to do so because the go clergy has decided that any kind of abstraction is heresy to the gospel of simplicity. Most go code I have seen is anything but simple because it forces you to read through a load of irrelevant implementation details just to find what you are actually looking for.

JavaScript isn’t even the worst Brendan Eich misdeed!

Okay but that’s more a Brendan Eich’s anti-achievement than JavaScript being any good

Digressing: The irony is that it’s a language with one of the best standard libraries out there. Wanna run an HTTP reverse proxy with TLS, cross-compiled for a different OS? No problem!

Many times I used it only because of that despite it being a worse language.

Its standard crypto libraries are also second to none.
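To make the reverse-proxy claim concrete, here is a minimal sketch using only Go’s standard library (`net/http`, `net/http/httputil`, `net/url`). The upstream address `http://127.0.0.1:8080`, the listen port `:8443`, and the `cert.pem`/`key.pem` file names are placeholder assumptions, and `newProxy` is a hypothetical helper name, not something from the thread.

```go
package main

import (
	"fmt"
	"log"
	"net/http"
	"net/http/httputil"
	"net/url"
)

// newProxy (hypothetical helper) builds a reverse proxy that forwards every
// request to a single upstream server.
func newProxy(upstream string) (*httputil.ReverseProxy, error) {
	target, err := url.Parse(upstream)
	if err != nil {
		return nil, err
	}
	// Reject inputs that lack a scheme or a host, such as a bare path.
	if target.Scheme == "" || target.Host == "" {
		return nil, fmt.Errorf("upstream %q needs a scheme and a host", upstream)
	}
	return httputil.NewSingleHostReverseProxy(target), nil
}

func main() {
	// Placeholder upstream; replace with the backend you want to expose.
	proxy, err := newProxy("http://127.0.0.1:8080")
	if err != nil {
		log.Fatal(err)
	}
	// TLS is terminated here using an existing certificate/key pair
	// (cert.pem and key.pem are assumed file names).
	log.Fatal(http.ListenAndServeTLS(":8443", "cert.pem", "key.pem", proxy))
}
```

Cross-compiling needs no extra toolchain: setting the `GOOS` and `GOARCH` environment variables, e.g. `GOOS=windows GOARCH=amd64 go build`, produces a binary for the other OS. The sketch does no hardening (timeouts, header policy), so treat it as a starting point rather than a production proxy.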

Impressive side fact: looks like they sent him an unsolicited email generated by a fucking llm? An impressive failure to read the fucking room.

Not like that exact bullshit has been attempted on the community at large by other shitheads last year, but then originality was never the clanker wankers’ strength

Other classic Rob Pike moments include spamming Usenet with Markov-chain bots and quoting the Bible to justify not highlighting Go syntax. Watching him have a Biblical meltdown over somebody emailing him generated text is incredibly funny in this context.

Pike’s blow-up has made it to the red site, and it’s brought promptfondlers out of the woodwork.

(Skyview proxy, for anyone without a Bluesky account)

I didn’t realize this was part of the rationalist-originated “AI Village” project. See https://sage-future.org/ and https://theaidigest.org/village. Involved members and advisors include Eli Lifland and Daniel Kokotajlo of “AI 2027” infamy.

Remember how slatestarcodex argues that non-violence works better as a method of protest? Turns out the research pointing to that is a bit flawed: https://roarmag.org/essays/chenoweth-stephan-nonviolence-myth/

realising that preaching nonviolence is actually fascist propaganda is one of those consequences of getting radicalised/deprogramming from being a liberal. You can’t liberate the camps with a sit-in, for example.

nonviolent civil disobedience and direct action are just tactics that work in specific circumstances and can achieve specific goals. pretty much every violent movement for change was supported by non-violent movements. and non-violence often appears in a form that is unacceptable to the slatestarcodex contingent. Like Daniel Berrigan destroying Vietnam draft cards, or Catholic Workers attacking US warplanes with hammers, or Black Lives Matter activists blocking a highway or Meredith and Verity Burgmann running onto the pitch during a South African rugby match.

yes! To be clear, what I said was lacking nuance. What I meant was: preaching for only non-violence is fucked. And preaching for very limited forms of non-violence is fully fucked: for example, state/police-sanctioned “peaceful” protests as the only form of protest.

It’s that classic tweet: “this is bad for your cause” says a guy who hates your cause and hates you. The slatestarcodex squad didn’t believe there was any reason to protest but thought that if people must protest they should have the decency to do it in a way that didn’t cause them to be 5 minutes late on their way to lunch.

Upvoted, but also consider: boycotts sometimes work. BDS is sufficiently effective that there are retaliatory laws against BDS.

Yes! It also highlights how willing the administration is to clamp down on even non-violence.

Thanks for posting. The author is provocative for sure, but I found he also wrote a similar polemic about veganism, kinda hard for me to situate it. Might fetch one of his volumes from the stacks during a slow week, probably would get my name put on a list though.

Yeah, don’t take me linking to this post by Gelderloos as fully supporting his other stances; it’s more that Scott’s claim that nonviolent movements work better isn’t as well supported as he says (and it also shows Scott never did much activism). So more anti-Scott than pro-Peter ;).

lol, Oliver Habryka at Lightcone is sending out begging emails, i found it in my spam folder

(This email is going out to approximately everyone who has ever had an account on LessWrong. Don’t worry, we will send an email like this at most once a year, and you can permanently unsubscribe from all LessWrong emails here)

declared Lightcone Enemy #1 thanks you for your attention in sending me this missive, Mr Habryka

In 2024, FTX sued us to claw back their donations, and around the same time Open Philanthropy’s biggest donor asked them to exit our funding area. We almost went bankrupt.

yes that’s because you first tried ignoring FTX instead of talking to them and cutting a deal

that second part means Dustin Moskovitz (the $ behind OpenPhil) is sick of Habryka’s shit too

If you want to learn more, I wrote a 13,000-word retrospective over on LessWrong.

no no that’s fine thanks

We need to raise $2M this year to continue our operations without major cuts, and at least $1.4M to avoid shutting down. We have so far raised ~$720k.

and you can’t even tap into Moskovitz any more? wow sucks dude. guess you’re just not that effective as altruism goes

And to everyone who donated last year: Thank you so much. I do think humanity’s future would be in a non-trivially worse position if we had shut down.

you run an overpriced web hosting company and run conferences for race scientists. my bayesian intuition tells me humanity will probably be fine, or perhaps better off.

“Would you like to know more?”

“Nah, I’m cool.”

More like

Would you like to know more

I mean, sure

Here’s a 13,000-word retrospective

Ah, nah fam

Ohoho, a beautiful lw begging post on this of all days?

you run an overpriced web hosting company and run conferences for race scientists. my bayesian intuition tells me humanity will probably be fine, or perhaps better off.

Someone in the comments calls them out: “if owning a $16 million conference centre is critical for the Movement, why did you tell us that you were not responsible for all the racist speakers at Manifest or Sam ‘AI-go-vroom’ Altman at another event because it’s just a space you rent out?”

OMG the enemies list has Sam Altman under “the people who I think have most actively tried to destroy it (LessWrong/the Rationalist movement)”

The PauseAI leader writes a hard takedown of the EA movement: https://forum.effectivealtruism.org/posts/yoYPkFFx6qPmnGP5i/thoughts-on-my-relationship-to-ea-and-please-donate-to

They may be a doomer with some crazy beliefs about AI, but they’ve accurately noted that EA is pretty firmly captured by Anthropic and the LLM companies and can’t effectively advocate against them. And they accurately call out the false-balance style and unevenly enforced tone/decorum norms that stifle the EA and LessWrong forums. Some choice quotes:

I think, if it survives at all, EA will eventually split into pro-AI industry, who basically become openly bad under the figleaf of Abundance or Singularitarianism, and anti-AI industry, which will be majority advocacy of the type we’re pioneering at PauseAI. I think the only meaningful technical safety work is going to come after capabilities are paused, with actual external regulatory power. The current narrative (that, for example, Anthropic wishes it didn’t have to build) is riddled with holes and it will snap. I wish I could make you see this, because it seems like you should care, but you’re actually the hardest people to convince because you’re the most invested in the broken narrative.

I don’t think talking with you on this forum with your abstruse culture and rules is the way to bring EA’s heart back to the right place

You’ve lost the plot, you’re tedious to deal with, and the ROI on talking to you just isn’t there.

I think you’re using specific demands for rigor (rigor feels virtuous!) to avoid thinking about whether Pause is the right option for yourselves.

Case in point: EAs wouldn’t come to protests, then they pointed to my protests being small to dismiss Pause as a policy or messaging strategy!

The author doesn’t really acknowledge how the problems were always there from the very founding of EA, but at least they see the problems as they are now. But if they succeeded, maybe they would help slow the waves of slop and capital replacing workers with non-functioning LLM agents, so I wish them the best.

I can imagine Peter Singer’s short-termist EA splitting off from the longtermists, but I have a hard time imagining any part of the EA movement aligning itself against global capitalism and American hegemony. If you are into that, there are movements which have better parties and more of a gender balance than Effective Altruism.

I totally agree. The linked PauseAI leader still doesn’t realize the full extent of the problem, but I’m kind of hopeful they may eventually figure it out. I think the ability to simply say this is bullshit (about in-group stuff) is a skill almost no LessWrongers and few EAs have.

Max Read argues that LessWrongers and longtermists are specifically trained to believe “I can’t call BS, I must listen to the full recruiting pitch then compose a reasoned response of at least 5,000 words or submit.”

odium symposium christmas bonus episode: we watched and reviewed Sean Hannity’s straight-to-Rumble 2023 Christmas comedy “Jingle Smells.”

I’ve subscribed, it scratches the itch between waiting for episodes of If Books Could Kill!

AI researchers are rapidly embracing AI reviews, with the new Stanford Agentic Reviewer. Surely nothing could possibly go wrong!

Here’s the “tech overview” for their website.

Our agentic reviewer provides rapid feedback to researchers on their work to help them to rapidly iterate and improve their research.

The inspiration for this project was a conversation that one of us had with a student (not from Stanford) that had their research paper rejected 6 times over 3 years. They got a round of feedback roughly every 6 months from the peer review process, and this commentary formed the basis for their next round of revisions. The 6 month iteration cycle was painfully slow, and the noisy reviews — which were more focused on judging a paper’s worth than providing constructive feedback — gave only a weak signal for where to go next.

How is it, when people try to argue about the magical benefits of AI on a task, it always comes down to arguing “well actually, humans suck at the task too! Look, humans make mistakes!” That seems to be the only way they can justify the fact that AI sucks. At least it spews garbage fast!

(Also, this is a little mean, but if someone’s paper got rejected 6 times in a row, perhaps it’s time to throw in the towel, accept that the project was never that good in the first place, and try better ideas. Not every idea works out, especially in research.)

When modified to output a 1-10 score by training to mimic ICLR 2025 reviews (which are public), we found that the Spearman correlation (higher is better) between one human reviewer and another is 0.41, whereas the correlation between AI and one human reviewer is 0.42. This suggests the agentic reviewer is approaching human-level performance.

Actually, all my concerns are now completely gone. They found that one number is bigger than another number, so I take back all of my counterarguments. I now have full faith that this is going to work out.

Reviews are AI generated, and may contain errors.

We had built this for researchers seeking feedback on their work. If you are a reviewer for a conference, we discourage using this in any way that violates the policies of that conference.

Of course, we need the mandatory disclaimers that will definitely be enforced. No reviewer will ever be a lazy bum and use this AI for their actual conference reviews.

the noisy reviews — which were more focused on judging a paper’s worth than providing constructive feedback

dafuq?

Yeah, it’s not like reviewers can just write “This paper is utter trash. Score: 2” unless ML is somehow an even worse field than I previously thought.

They referenced someone who had a paper get rejected from conferences six times, which to me is an indication that their idea just isn’t that good. I don’t mean this as a personal attack; everyone has bad ideas. It’s just that at some point, you just have to cut your losses with a bad idea and instead use your time to develop better ideas.

So I am suspicious that when they say “constructive feedback”, they don’t mean “how do I make this idea good” but instead “what are the magic words that will get my paper accepted into a conference”. ML has become a cutthroat publish-or-perish field, after all. It certainly won’t help that LLMs are effectively trained to glaze the user at all times.

we found that the Spearman correlation (higher is better) between one human reviewer and another is 0.41

This stinks to high heaven: why would you want these to be more highly correlated? There’s a reason you assign multiple reviewers, preferably with slightly different backgrounds, to a single paper. Reviews are obviously subjective! There’s going to be some consensus (especially with very bad papers; really bad papers are almost universally reviewed poorly, because you know, they suck), but whether a particular reviewer likes what you did and how you presented it is a bit of a lottery.

Also the worth of a review is much more than a 1-10 score: it should contain detailed justification for the reviewer’s decision so that a meta-reviewer can then look and pinpoint relevant feedback, or even decide that a low-scoring paper is worthwhile and can be published after small changes. All of this is an abstraction (a slightly flawed one, of course) of humans talking to each other. Show your paper to 3 people and you’ll get 4 different impressions. This is not a bug!

Problem: Reviewers do not provide constructive criticism or at least reasons for a paper to be rejected. Solution: Fake it with a clanker.

Genius.

To be fair, currently conference reviewers frequently do not even do reviewing so this might be a step up.

terrible state of house CO detectors is not an excuse for putting a CO generator in the living room.

me, thinking it’s a waste to not smoke indoors because my landlord won’t fix the CO detectors: oh

(jk I don’t smoke)

Going from lazy, sloppy human reviews to absolutely no humans is still a step down. LLMs don’t have the capability to generalize outside the (admittedly enormous) training dataset they have, so cutting edge research is one of the worse use cases for them.

An LLM is better than literally nothing. There have been scandals of papers at conferences being basically copies of previous papers, and that was only caught because some random person online read the papers.

Nobody is reading papers. Universities are a clout machine.

Alice: what is `2 + 2`?

LLM: `random.random() + random.random()`

Alice: `1.2199404515268157` is better than nothing, i guess

you’ve got so much in common with an LLM, since you also seem to be spewing absolute bullshit to an audience that doesn’t like you

What value are you imagining the LLM providing or adding? They don’t have a rich internal model of the scientific field to provide an evaluation of novelty or contribution to the field. They could maybe spot some spelling or grammar errors, but so can more reliable algorithms. I don’t think they could accurately spot if a paper is basically a copy or redundant, even if given RAG on all the past papers submitted to the conference. A paper carefully building on a previous paper vs. a paper blindly copying a previous paper would look about the same to an LLM.

Your premise is total bullshit. That being said, I’d prefer a world where nobody reads papers and journals stop existing to a world where we are boiling the oceans to rubber-stamp papers.

Nobody is reading papers. Universities are a clout machine.

Sokal, you should log off

Funny story: Just yesterday, I wrote to a journal editor pointing out that a term coined in a paper they had just printed had actually been used with the same meaning 20 years ago. They wrote back to say that I was the second person to point this out and that an erratum would be issued.

Starting this Stubsack off, here’s Baldur Bjarnason lamenting how tech as a community has gone down the shitter.

Some of them have to use it at work and are just making the most of it, but even those end up recruited into tech’s latest glorious vision of the future.

Ah fuck. This is the worst part, for me.

That’s a bummer of a post but oddly appropriate during the darkest season in the northern hemisphere in a real bummer of a year. Kind of echoes the “Stop talking to each other and start buying things!” post from a few years back though I forget where that one came from.

I think I read that post and thought it was incredibly naive, on the level of “why does the barkeep ask if I want a drink?” or “why does the pretty woman with a nice smile want me to pay for the VIP lounge?” Cheap clanky services like forums and mailing lists and Wordpress blogs can be maintained by one person or a small club but if you want something big, smooth, and high-bandwidth someone is paying real money and wants something back. Examples in the original post included geocities, collegeclub.com, MySpace, Friendster, Livejournal, Tumblr, Twitter and those were all big business which made big investments and hoped to make a profit.

Anyone who has helped run a medium-sized club or a Fedi server has faced “We are growing. Input of resources from new members is not matching the growth in costs and hassle. How do we explain to the new members what we need to keep going and get them to follow up?”

There is a whole argument that VC-backed for-profit corporations are a bad model for hosting online communities, but even nonprofits or Internet celebrities with active comments face the issue “this is growing, it requires real server expenses and professional IT support and serious moderation. Where are those coming from? Our user base is used to someone else invisibly providing that.”

if you have a point, make it. nihilism is cheap.

It’s not nihilism to observe that Reddit is bigger and fancier than this Lemmy server because Reddit is a giant business that hopes to make money from users. Online we have a choice between relatively small, janky services on the Internet (where we often have to pay money or help with systems administration and moderation) or big flashy services on corporate social media where the corporation handles all the details for us but spies on us and propagandizes us. We can choose (remember the existentialists?) but each comes with its own hassles and responsibilities.

And nobody, whether a giant corporation or a celebrity, is morally obliged to keep providing tech support and moderation and funding for a community just because it formed on its site. I have been involved in groups or companies which said “we can’t keep running this online community, we will scale it back / pass it to some of our users and let them move it to their own domain and have a go at running it” and they were right to make that choice. Before Musk Twitter spent around $5 billion/year and I don’t think donations or subscriptions were ever going to pay for that (the Wikimedia Foundation raises hundreds of millions a year, and many more people used Wikipedia than used Twitter).

I kinda half agree, but I’m going to push back on at least one point. Originally most of Reddit’s moderation was provided by unpaid volunteers, with paid admins only acting as a last resort. I think this is probably still true even after they purged a bunch of mods that were mad Reddit was being enshittified. And the official paid admins were notoriously slow at purging some really blatant, over-the-line content, like the jailbait subreddit or the original donald trump subreddit. So the argument is that Reddit benefited and still benefits heavily from that free moderation, and the content itself generated and provided by users is valuable, so acting like all Reddit users are simply entitled free riders isn’t true.

A point that Maciej Ceglowski among others has made is that the VC model traps services into “spend big” until they run out of money or enshittify, and that services like Dreamwidth, Ghost, and Signal offer ‘social-media-like’ experiences on a much smaller budget while earning modest profits or paying for themselves. But Dreamwidth, Ghost, and Signal are never going to have the marketing budget of services funded by someone else’s money, or be able to provide so many professional services gratis. So you have to choose: threadbare security on the open web, or jumping from corporate social media to corporate social media amidst bright lights and loudspeakers telling you what site is the NEW THING.

It sounds like part, maybe even most, of the problem is self inflicted by the VC model traps and the VCs? I say we keep blocking ads and migrating platforms until VCs learn not to fund stuff with the premise of ‘provide a decent service until we’ve captured enough users, then get really shitty’.

In an ideal world, reddit communities could have moved onto a self-hosted or nonprofit service like LiveJournal became Dreamwidth. But it was not a surprise that a money-burning for-profit social media service would eventually try to shake down the users, which is why my Reddit history is a few dozen Old!SneerClub posts while my history on the Internet is much more extensive. The same thing happened with ‘free’ PhpBB services and mailing list services like Yahoo! Groups, either they put in more ads or they shut down the free version.

you’re either not understanding or misrepresenting valente’s points in order to make yours: that we can’t have nice things and shouldn’t either want or expect them, because it’s unreasonable. nothing can change, nothing good can be had, nothing good can be achieved. hence: nihilism.

Not at all. I am saying that we cannot all have our own digital Versailles and servants forever after. We can have our own digital living room and kitchen and take turns hosting friends there, but we have to do the work, and it will never be big or glamorous. Valente could have said “big social media sucks but small open web things are great” but instead she wants the benefits of big corporate services without the drawbacks.

I have been an open web person for decades. There is lots of space there to explore. But I do not believe that we will ever find a giant corporation which borrows money from LutherCorp and Bank of Mordor, builds a giant ‘free’ service with a slick design, and never runs out of money or starts stuffing itself with ads.

Lots of words to say “but what were the users wearing?”

If you can’t sustain your business model without turning it into a predatory hellscape then you have failed and should perish. Like I’m sorry, but if a big social media service that actually serves its users is financially infeasible, then big social media services should not exist. Plain and simple.

Yes, all of these services should perish. But if you repeatedly choose to build community on a for-profit service that is bleeding money, you can’t complain that it eventually runs out and either goes bankrupt or is restructured to make more money. Valente wants the perks of a site that spends a lot of money, but democratic government and no annoying ads or tracking.

Again, there are only two sensible ways out:

- Stop doing that. We really don’t need Twitter to exist.

- We agree as a society that we do need Twitter to exist and it is a public good, so we create it as any other piece of important public infrastructure and use taxes to finance it.

I agree that “publicly owned, publicly funded” would be a fine option (but which public? Is twitter global or for the USA or for California?) Good luck making the case for $5 billion/year new tax revenues to fund that new expense. Until then, there are small janky services on the Fediverse which rely on donations and volunteer work.

take it out of the military budget, should be less than a rounding error

Sean Munger, my favorite history YouTuber, has released a 3-hour long video on technology cultists from railroads all the way to LLMs. I have not watched this yet but it is probably full of delicious sneers.

Gemini helps a guy increase his google cloud bill 18x

The commit message claimed “60% cost savings.”

Arnie voice: “You know when I said I would save you money? I lied”

It’s getting hard to track all these AI wins. Is there a web3isgoinggreat.com for AI by now?

this was literally our elevator pitch for Pivot to AI

I like it but I sorely miss the Grift Counter™.

> What guardrails work that don’t depend on constant manual billing checks?

Have you considered not blindly trusting the god damn confabulation machine?

> AI is going to democratize the way people don’t know what they’re doing

Ok, sometimes you do got to hand it to them

oof ow my bones why do my bones hurt?

–a man sipping from a bottle labeled “bone hurting juice”

I posted about Eliezer hating on OpenPhil for having too long AGI timelines last week. He has continued to rage in the comments and replies to his call out post. It turns out, he also hates AI 2027!

I looked at “AI 2027” as a title and shook my head about how that was sacrificing credibility come 2027 on the altar of pretending to be a prophet and picking up some short-term gains at the expense of more cooperative actors. I didn’t bother pushing back because I didn’t expect that to have any effect. I have been yelling at people to shut up about trading their stupid little timelines as if they were astrological signs for as long as that’s been a practice (it has now been replaced by trading made-up numbers for p(doom)).

When we say it, we are sneering, but when Eliezer calls them stupid little timelines and compares them to astrological signs it is a top quality lesswrong comment! Also a reminder for everyone that I don’t think we need: Eliezer is a major contributor to the rationalist attitude of venerating super-forecasters and super-predictors and promoting the idea that rational smart well informed people should be able to put together super accurate predictions!

So to recap: long timelines are bad and mean you are a stuffy bureaucracy obsessed with credibility, but short timelines are also bad and are going to expend the doomers’ credibility, so you should clearly just agree with Eliezer’s views, which don’t include any hard timelines or P(doom)s! (As cringey as they are, at least they are committing to predictions in a way that can be falsified.)

Also, the mention of sacrificing credibility makes me think Eliezer is willfully playing the game of avoiding hard predictions to keep the grift going (as opposed to deluding himself with reasons not to commit to a hard timeline or at least put out some firm P()s).

Eliezer is a major contributor to the rationalist attitude of venerating super-forecasters and super-predictors and promoting the idea that rational smart well informed people should be able to put together super accurate predictions!

This is a necessary component of his imagined AGI monster. Good thing it’s bullshit.

Super-prediction is difficult, especially about the super-future. —old Danish proverb

And looking that up led me to this passage from Bertrand Russell:

The more tired a man becomes, the more impossible he finds it to stop. One of the symptoms of approaching nervous breakdown is the belief that one’s work is terribly important and that to take a holiday would bring all kinds of disaster. If I were a medical man, I should prescribe a holiday to any patient who considered his work important.

Watching this guy fall apart as he’s been left behind has sure been something.

it has now been replaced by trading made-up numbers for p(doom)

Was he wearing a hot-dog costume while typing this wtf

I really don’t know how he can fail to see the irony or hypocrisy at complaining about people trading made up probabilities, but apparently he has had that complaint about P(doom) for a while. Maybe he failed to write a call out post about it because any criticism against P(doom) could also be leveled against the entire rationalist project of trying to assign probabilities to everything with poor justification.

I believe in you Eliezer! You’re starting to recognise that the AI doom stuff is boring nonsense! I’m cheering for you to dig yourself out of the philosophical hole you’ve made!

We have a new odium symposium episode. This week we talk about Ayn Rand, who turned out to be much much more loathsome than i expected.

available everywhere (see www.odiumsymposium.com). patreon episode link: https://www.patreon.com/posts/haters-v-ayn-146272391

Catching up and I want to leave a Gödel comment. First, correct usage of Gödel’s Incompleteness! Indeed, we can’t write down a finite set of rules that tells us what is true about the world; we can’t even do it for natural numbers, which is Tarski’s Undefinability. These are all instances of the same theorem, Lawvere’s Fixed-Point. Cantor’s theorem is another instance of Lawvere’s theorem too. In my framing, previously, on Awful, postmodernism in mathematics was a movement from 1880 to 1970 characterized by finding individual instances of Lawvere’s theorem. This all deeply undermines Rand’s Objectivism by showing that either it must be uselessly simple and unable to deal with real-world scenarios or it must be so complex that it must have incompleteness and paradoxes that cannot be mechanically resolved.

I’m pretty sure that Atlas Shrugged is actually just cursed and nobody has ever finished it. John Galt’s speech gets two pages longer whenever you finish one.

And I think the challenge with engaging with Rand as a fiction author is that, put bluntly, she is bad at writing fiction. The characters and their world don’t make any sense outside of the allegorical role they play in her moral and political philosophy, so you’re not so much reading a good story with thought behind it as reading a philosophical treatise that happens to take the form of dialogue. It’s a story in the same way that Plato’s Republic is a story, but the Republic at least rewards understanding the context of its different speakers, if only as a historical text.