Want to wade into the snowy surf of the abyss? Have a sneer percolating in your system but not enough time/energy to make a whole post about it? Go forth and be mid: Welcome to the Stubsack, your first port of call for learning fresh Awful you’ll near-instantly regret.

Any awful.systems sub may be subsneered in this subthread, techtakes or no.

If your sneer seems higher quality than you thought, feel free to cut’n’paste it into its own post — there’s no quota for posting and the bar really isn’t that high.

The post Xitter web has spawned soo many “esoteric” right wing freaks, but there’s no appropriate sneer-space for them. I’m talking redscare-ish, reality challenged “culture critics” who write about everything but understand nothing. I’m talking about reply-guys who make the same 6 tweets about the same 3 subjects. They’re inescapable at this point, yet I don’t see them mocked (as much as they should be)

Like, there was one dude a while back who insisted that women couldn’t be surgeons because they didn’t believe in the moon or in stars? I think each and every one of these guys is uniquely fucked up and if I can’t escape them, I would love to sneer at them.

(December’s finally arrived, and the run-up to Christmas has begun. Credit and/or blame to David Gerard for starting this.)

github produced their ~~annual insights into the state of open source and public software projects~~ barrel of marketing slop, and it’s as self-congratulatory as unreadable and completely opaque.

tl;dr: AI! Agents! AI! Agents! AI! Agents! AI…

Just one thing that caught my attention:

AI code review helps developers. We … found that 72.6% of developers who use Copilot code review said it improved their effectiveness.

Only 72.6%? So why the heck are the other almost 30% of devs using it? For funsies? They don’t say.

You’d think due to self selection effects most people who wouldn’t find using Copilot effective wouldn’t use it.

The only way that number makes sense to me is if people were forced to use Copilot and… no, wait, that checks out.

something i was thinking about yesterday: so many people i ~~respect~~ used to respect have admitted to using llms as a search engine. even after i explain the seven problems with using a chatbot this way:

- wrong tool for the job

- bad tool

- are you fucking serious?

- environmental impact

- ethics of how the data was gathered/curated to generate[1] the model

- privacy policy of these companies is a nightmare

- seriously what is wrong with you

they continue to do it. the ease of use, together with the valid syntax output by the llm, seem to short-circuit something in the end-user’s brain.

anyway, in the same way that some vibe-coded bullshit will end up exploding down the line, i wonder whether the use of llms as a search engine is going to have some similar unintended consequences — “oh, yeah, sorry boss, the ai told me that mr. robot was pretty accurate, idk why all of our secrets got leaked. i watched the entire series.”

additionally, i wonder about the timing. will we see sporadic incidents of shit exploding, or will there be a cascade of chickens coming home to roost?

[1] they call this “training” but i try to avoid anthropomorphising chatbots

Is there any search engine that isn’t pushing an “AI mode” of sorts? Some are more sneaky or give an option to “opt out” like duckduckgo, but this all feels temporary until it is the only option.

I have found it strange how many people will say “I asked chatgpt” with the same normalcy as “googling” was.

Sadly web search, and the web in general, have enshittified so much that asking ChatGPT can be a much more reliable and quicker way to find information. I don’t excuse it for anything that you could easily find on wikipedia, but it’s useful for queries such as “what’s the name of that free indie game from the 00s that was just a boss rush no you fucking idiot not any of this shit it was a game maker thing with retro pixel style or whatever ugh” where web search is utterly useless. It’s a frustrating situation, because of course in an ideal world chatbots don’t exist and information on the web is not drowned in a sea of predatory bullshit, reliable web indexes and directories exist and you can easily ask other people on non-predatory platforms. In the meanwhile I don’t want to blame the average (non-tech-evangelist, non-responsibility-having) user for being funnelled into this crap. At worst they’re victims like all of us.

Oh yeah and the game’s Banana Nababa by the way.

“they call this “training” but i try to avoid anthropomorphising chatbots”

You can train animals, you can train a plant, you can train your hair. So it’s not really anthropomorphising.

At work, i watched my boss google something, see the “ai overview” and then say “who knows if this is right”, and then read it and then close the tab.

It made me think about how this is how like a rumor or something happens. Even in a good case, they read the text with some scepticism but then 2 days later they forgot where they heard it and so they say they think whatever it was is right.

Yes i know the kid in the omelas hole gets tortured each time i use the woe engine to generate an email. Is that so wrong?

Nicky Case posted the ending to her AI Safety for Fleshy Humans series and gosh I miss when I thought she was cool 🫠

linking to Rob Miles and an EA longtermist fund at the end of the outro really gives the game away lol

(interestingly this also involves Hack Club, which is the org that Ghostty is now part of from @[email protected]’s post earlier today? I wonder what’s up with them)

I am not familiar with Case or her work, but based on my shallow research, she’s part of the cohort of internet stuff-communicators including people like Vi Hart or the Oatmeal etc. Not casting aspersions on anyone here, but I’d be interested to know who amongst them have revealed their chud nature and who has remained pristine (…for now).

Last I checked Vi Hart still seems like a good egg (idk if my closeted teenaged self hiding in the recesses of my brain could take it otherwise 😭)

I’m a bit more familiar with Case - I mainly knew her for making web games like :the game: trilogy, Nothing to Hide and We Become What We Behold.

Fucking sucks to see she’s bought into this shit.

It’s even way too long a read and full of footnotes, as is tradition!

Heck it’s even long enough that I just came across the second flashcard break, and I’m 1/4th of the way through part 1…

I was annoyed at the start lumping in ai ethics with all the ai doom stuff, and then basically stopped reading.

Do think it is interesting that Miles is part of a collaborative yt tech channel and they recently released an ai slop episode. (Which I have not watched yet either, things need to slow down for a bit please).

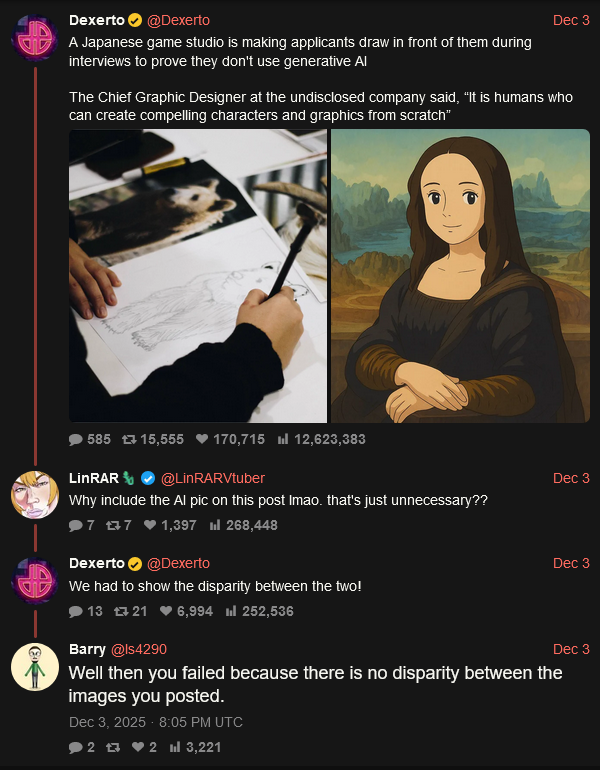

Dexerto has reported on an unnamed Japanese game studio weeding out promptfondlers (by having applicants draw something in-person during interviews).

Unsurprisingly, the replies have become a promptfondler shooting gallery. Personal “favourite” goes to the guy who casually admits he can’t tell art from slop:

Pam Samuelson (UC Berkeley) has a nice explainer on AI copyright - https://cacm.acm.org/opinion/does-using-in-copyright-works-as-training-data-infringe/

This looks like it’s relevant to our interests

Hayek’s Bastards: Race, Gold, IQ, and the Capitalism of the Far Right by Quinn Slobodian

https://press.princeton.edu/books/hardcover/9781890951917/hayeks-bastards

Always thought she should have stuck to acting.

(I know, Hayek just always reminds me of how people put his quotes over Hayek’s image, and people just get really mad at her, and not at him. Always wonder if people would have been just as mad if it was Friedrich’s image and not Salma’s due to the sexism aspect).

Cory Doctorow has plugged this on his blog, which is usually a good signal for me.

He came by campus last spring and did a reading, very solid and surprisingly well-attended talk.

u wot m8???!!

Show HN: I analyzed 8k near-death experiences with AI and made them listenable

psychic damage warning, obviously

Hat tip to the person who wants to try and include DMT and other hallucinogens and psychedelics. How many of these experiences are gonna be tagged “Machine Elves” by the time anyone starts asking wtf we’re doing here?

A second post on software project management in a week, this one from deadsimpletech: failed software projects are strategic failures.

A window into another IT disaster I wasn’t aware of, but clearly there is no shortage of those. An Australian one this time.

And of course, without having at least some of that expertise in-house, they found themselves completely unable to identify that Accenture was either incompetent, actively gouging them or both.

(spoiler alert, it was both)

Interesting mention of clausewitz in the context of management, which gives me pause a bit because techbros famously love the “art of war”, probably because sun tzu was patiently explaining obvious things to idiots and that works well on them. “On war” might be a better text, I guess.

I associate Clausewitz (and especially John Boyd) references more with a Palantir / Stratfor / Booz / LE-MIC-consulting class compared to your typical bay area YC techbro in the US, and a very different crowd over in AU / NZ where grognards probably outnumber the actual military. LWers never bring up Clausewitz either but love Sun Tzu. But as far as software strategy posts go, I’d much rather read a Clausewitz tie-in than, say, Mythical Man Month or Agile anything.

Much of the content of mythical man month is still depressingly relevant, especially in conjunction with brooks’ later stuff like no silver bullets. A lot of senior tech management either never read it, or read it so long ago that they forgot the relevant points beyond the title.

It’s interesting that clausewitz doesn’t appear in lw discussions. That seems like a big point in favour of his writing.

If you liked Brooks, you might give Gerald Weinberg a try. A bit more folksy / less corporate.

More grok shit: https://futurism.com/artificial-intelligence/grok-doxxing In contrast to most other models, it is very good at doxxing people.

Amazing how everything Musk makes is the worst in class (and somehow the Rationalists think he will be their saviour (that is because he is a eugenicist)).

(thinks) groxxing

the base use for LLMs is gonna be hypertargetted advertising, malware, political propaganda etc

well the base case for LLMs is that, right now

the privacy nerds won’t know what hit them

saw this elsewhere. the account itself appears to be a luckey stan account, but the next

There’s more crust than air or sea or land… so a vehicle that moves through the crust of the earth is going to be a huge deal

I have built working prototypes of this

so are we talking mining, or The Core (2003)? it feels like he’s trying to pitch it as though it’s a Tiberian Sun style subterranean APC, but I can’t be sure whether I’m reading into it

hey crazy idea, what if we made a vehicle that moves across the habitable surface of the crust instead

it would need to be organized somehow, make it big and electric to be efficient. people go to the same places every day so you can just put a durable track of some kind in the right place,

Don’t forget he made a prototype of a gaming helmet with shotgun shells aimed to kill the wearer.

I’m thinking nydus worms from SC2 or the GLA tunnel system in C&C generals.

right? like, is felon finally getting competition for unhinged billionaire gamerposting?

announcing “leeroy jenkins” mode for grok where it just posts your tweet drafts and you can’t delete them

A lobster wonders why the news that a centi-millionaire amateur jet pilot has decided to offload the cost of developing his pet terminal software onto peons begging for contributions has almost 100 upvotes, and is absolutely savaged for being rude to their betters

https://lobste.rs/s/dxqyh4/ghostty_is_now_non_profit#c_b0yttk

enter hashimoto. cringe intensifies

(note: out-of-order to linked post for comment cohesion)

Terminals are an invisible technology to most

what a fucking sentence

…that are hyper present in the everyday life of many in the tech industry.

hyper? like this?

But the terminal itself is boring, the real impact of Ghostty is going to be in libghostty and making all of this completely available for many use cases. My hope is that through building a broadly adopted shared underlayer of terminals around the industry we can do some really interesting things.

oh good so the rentier bridgetroll wants to do just a monopoly play? that’s fine I’m sure. note: I don’t think there’s a more charitable reading of this. those shared underlayers already exist, in the form of decades of protocol and other development. many of them suck and I agree about trying to do better, but I (rather strongly) suspect hashi and I have very different ideas of what that looks like

I’ve already addressed the belittling of the project I really find useful and care about. So let’s just move on to the financial class.

Regardless of my financial ability to support this project, any project that financially survives (for or non-profit) at the whims of a single donor is an unhealthy project

“uwu, think of the poor projects. yes sure I could throw $20m at this in some kind of funny trust and have it live forever but that wouldn’t allow me to evade the point so much!”

I paid a 9-figure tax bill and also donated over 5% of my other stuff to charity this year

“I’m not as bad as the other billionaires I promise”

I’m too fucking old to care about hipster terminals, so I had no idea ghostty was started by a (former) billionaire. If forced to choose a new terminal I will certainly take this fact into consideration.

all things aside, is current ghostty any good, or still an ~~audiophile~~consolephile-ware?

i’m generally reluctant to try something which reeks of intensive self-promotion, but a few months ago i decided to finally see what the hype is about, and, well, it’s a terminal emulator.

wezterm does much more, and with a much cleaner ui, and it’s programmable, and the author doesn’t remind me that hashicorp is a thing that exists.

been giving it a whirl for a few weeks now and greatly enjoying it. one or two minor snags I still need to solve (such as `back-kill-word` not working in search, just haven’t looked into it yet) but otherwise fairly pleased

and I no longer have to see the stupid iterm2 update nag every damn day

`config.keys` and hot reload are nice

happy to hear it!

one or two minor snags I still need to solve (such as back-kill-word not working in search, just haven’t looked into it yet)

there’s a separate key config for search mode which may give you what you need.

second person today I saw mentioning wezterm, guess I should look sometime for familiarity

ghosTTy is the username of a schizoposter on Something Awful who only shows up to post bitcoin price charts and get mocked into oblivion. I wonder if there’s any connection?

I took psychic damage by scrolling up and seeing promptsimon posting a real doozie:

I have been enjoying hitting refresh on https://fuckthisurl/froztbyte-scrubbed-it-intentionally throughout today and watching the number grow - it’s nice to see a clear example of people donating to a new non-profit open source project.

“oooh! look at the vanity project go! weeeee, isn’t having a famous face attached to it fun?” with exactly no reflection on the fucking daunting state of open source funding in multiple other domains and projects

bring back rich people rolling their own submarines and getting crushed to death in the bathyal zone

there’s some more cursor fun too. no sneers yet, I’ve barely started reading

oh I just saw this is almost a month old! still funny tho

(and I’ve been busy af afkspace)

saw it via jonny who did do some notes

Another day, another instance of rationalists struggling to comprehend how they’ve been played by the LLM companies: https://www.lesswrong.com/posts/5aKRshJzhojqfbRyo/unless-its-governance-changes-anthropic-is-untrustworthy

A very long, detailed post, elaborating very extensively on the many ways Anthropic has played the AI doomers, promising AI safety but behaving like all the other frontier LLM companies, including blocking any and all regulation. The top responses are all tone policing and the like, denying it in a half-assed way that doesn’t really engage with the fact that Anthropic has lied and broken “AI safety commitments” to rationalists/lesswrongers/EAs shamelessly and repeatedly:

I feel confused about how to engage with this post. I agree that there’s a bunch of evidence here that Anthropic has done various shady things, which I do think should be collected in one place. On the other hand, I keep seeing aggressive critiques from Mikhail that I think are low-quality (more context below), and I expect that a bunch of this post is “spun” in uncharitable ways.

I think it’s sort of a type error to refer to Anthropic as something that one could trust or not. Anthropic is a company which has a bunch of executives, employees, board members, LTBT members, external contractors, investors, etc, all of whom have influence over different things the company does.

I would find this all hilarious, except a lot of the regulation and some of the “AI safety commitments” would also address real ethical concerns.

If rationalists could benefit from just one piece of advice, it would be: actions speak louder than words. Right now, I don’t think they understand that, given their penchant for 10k word blog posts.

One non-AI example of this is the most expensive fireworks show in history, I mean, the SpaceX Starship program. So far, they have had 11 or 12 test flights (I don’t care to count the exact number by this point), and not a single one of them has delivered anything into orbit. Fans generally tend to cling on to a few parlor tricks like the “chopstick” stuff. They seem to have forgotten that their goal was to land people on the moon. This goal had already been accomplished over 50 years ago with the 11th flight of the Apollo program.

I saw this coming from their very first Starship test flight. They destroyed the launchpad as soon as the rocket lifted off, with massive chunks of concrete flying hundreds of feet into the air. The rocket itself lost control and exploded 4 minutes later. But by far the most damning part was when the camera cut to the SpaceX employees wildly cheering. Later on there were countless spin articles about how this test flight was successful because they collected so much data.

I chose to believe the evidence in front of my eyes over the talking points about how SpaceX was decades ahead of everyone else, SpaceX is a leader in cheap reusable spacecraft, iterative development is great, etc. Now, I choose to look at the actions of the AI companies, and I can easily see that they do not have any ethics. Meanwhile, the rationalists are hypnotized by the Anthropic critihype blog posts about how their AI is dangerous.

I chose to believe the evidence in front of my eyes over the talking points about how SpaceX was decades ahead of everyone else, SpaceX is a leader in cheap reusable spacecraft, iterative development is great, etc.

I suspect that part of the problem is that there is a company in there that’s doing a pretty amazing job of reusable rocketry at lower prices than everyone else under the guidance of a skilled leader who is also technically competent, except that leader is gwynne shotwell who is ultimately beholden to an idiot manchild who wants his flying cybertruck just the way he imagines it, and cannot be gainsaid.

This would be worrying if there was any risk at all that the stuff Anthropic is pumping out is an existential threat to humanity. There isn’t, so this is just rats learning how the world works outside the blog bubble.

I mean, I assume the bigger they pump the bubble, the bigger the burst, but at this point the rationalists aren’t really so relevant anymore, they served their role in early incubation.

/r/SneerClub discusses MIRI financials and how Yud ended up getting paid $600K per year from their cache.

Malo Bourgon, MIRI CEO, makes a cameo in the comments to discuss Ziz’s claims about SA payoffs and how he thinks Yud’s salary (the equivalent of like 150.000 malaria vaccines) is defensible for reasons that definitely exist, but they live in Canada, you can’t see them.

Guy does a terrible job explaining literally anything. Why, when trying to explain all the SA based drama, does he choose to create an analogy where the former employee is heavily implied to have murdered his wife?

S/o to cinnaverses for mixing it up in there.

Cinnas

The Rolling Stone article is a bit odd (it appears to tell the story of the ex-employee who created Miricult twice, the first time without names and the second naming the accuser) but I trust them that MIRI did pay the accuser. Rolling Stone are a serious news organization which can be sued.

Yeah, I think Rolling Stone was worried about getting sued and omitted Helm’s name in the first draft (or something like that).

I know who the alleged victim was, and I think there probably was a crime and blackmail payments but the alleged victim didn’t want to come forward for a number of reasons (among other things, he’s still part of the rationalist community and has faced a lot of harassment from the public after an unrelated newspaper article outed him as being trans). I’d also point out that the only person that miricult directly accused of statutory rape was one of Yudkowsky’s employees rather than Yudkowsky himself. That being said, the journalist who wrote the Rolling Stone article claims she got a copy of the police report Helm filed and only Yudkowsky was named.

Even if miricult was total bullshit I’m confident that the alleged victim was lying about not being exploited by other rationalists; a few years later he and a couple of other people posted accounts of being sexually abused by a rationalist (unrelated to miricult) and it led to the abuser being ostracized from the rationalist community.

Anyways I know a lot more about this but I’d rather not discuss the details on a publicly viewable forum to protect the privacy of the people involved.

I agree that it’s gross to discuss a lot of this in public, and that underage sex is often an ethical grey area. I had no idea that the person who accused BD of pushing him into substance use and extreme BDSM scenarios is also the person who allegedly had sex underage with a MIRI staffer while living in a Rationalist group home.

Ziz’s blog had posts that revealed his identity and mentioned some of the BD stuff, once I found them it was just a matter of putting two and two together, so to speak

“Nah, salary stuff is private”, starting to think this sort of stuff is an idea introduced to protect capital and nobody else.

I was teasing this out in my head to try to come up with a good sneer. First thought: for an organisation that tries to appeal to EAs, you’d think that they would do a good job of being transparent about why so much money is being spent on someone with such low output. But immediate rebuttal: the whole point of the TESCREAL cult shit is that yud gets free tuocs because he’s the chosen one to solve alignment.

Was thinking more about how the radical “don’t fall for biases, think for yourself, and come here to really learn to think (so we can stop the paperclip machine and resurrect the dead)” crowd defends a half-million-dollar salary with a ‘that’s private’.

But that is the same conclusion. The prophet must be protected.

Damn, I missed this and now the comment is deleted. Do you happen to remember what he said?

I believe he was trying to explain why it looked like MIRI had paid money out to an alleged sexual abuser. The analogy was constructed something like this:

1. A and B work at a company C

2. A has conflict with B.

3. C decides to fire B.

4. unrelated to 1, 2, or 3, B has a wife D, who dies in mysterious circumstances, leading A to strongly believe that B killed D.

5. The police, E, perform an investigation and decide not to pursue a case against B

6. C pays out B’s severance, unrelated to 2, 4, or 5.

Don’t blame me or how I remembered this if this doesn’t make sense.

Additionally he said something to the effect of “I don’t blame you for not knowing this, it wasn’t effectively communicated to the media”, like it’s no big deal, which isn’t really helping to beat the allegations of don’t-ask-don’t-tell policies about SA in rat related orgs.

Can confirm. This was like if the pope walked into an r/atheism meetup and showed his texts saying “dw bro, I’ll just move you to a different diocese, btw this totally isn’t about the allegations wink wink”

New preprint just dropped, and noticed some seemingly pro AI people talk about it and conclude that people who have more success with genAI have better empathy, are more social and have theory of mind. (I will not put those random people on blast, I also have not read the paper itself (aka, I didn’t do the minimum actually required research so be warned), just wanted to give people a heads up on it).

But yes, that does describe the AI pushers, social people who have good empathy and theory of mind. (Also, oh god, genAI runs on fairy rules, you just gotta believe it is real (I’m joking a bit here, it is prob fine, as it helps if you understand where a model is coming from and realize its limitations, and the research seems to be talking about humans + genAI vs just genAI)).

So, I’m not an expert study-reader or anything, but it looks like they took some questions from the MMLU, modified it in some unspecified way and put it into 3 categories (AI, human, AI-human), and after accounting for skill, determined that people with higher theory of mind had a slightly better outcome than people with lower theory of mind. They determined this based on what the people being tested wrote to the AI, but what they wrote isn’t in the study.

What they didn’t do is state that people with higher theory of mind are more likely to use AI or anything like that. The study also doesn’t mention empathy at all, though I guess it could be inferred.

Not that any of that actually matters because how they determined how much “theory of mind” each person had was to ask Gemini 2.5 and GPT-4o.

The empathy bit was added by people talking about the study, sorry if that wasn’t clear.